7. Expanding


Expanding is the task of taking a short piece of text, such as a set of instructions or a list of topics, and having the large language model generate a longer piece of text, such as an email or an essay about some topic. There are some great uses of this, such as using a large language model as a brainstorming partner. But I also want to acknowledge that there are some problematic use cases, such as someone using it to generate a large amount of spam.

So when you use these capabilities of a large language model, please use them only in a responsible way and in a way that helps people. In this video we'll go through an example of how you can use a language model to generate a personalized email based on some information. The email identifies itself as coming from an AI bot, which, as Andrew mentioned, is very important.

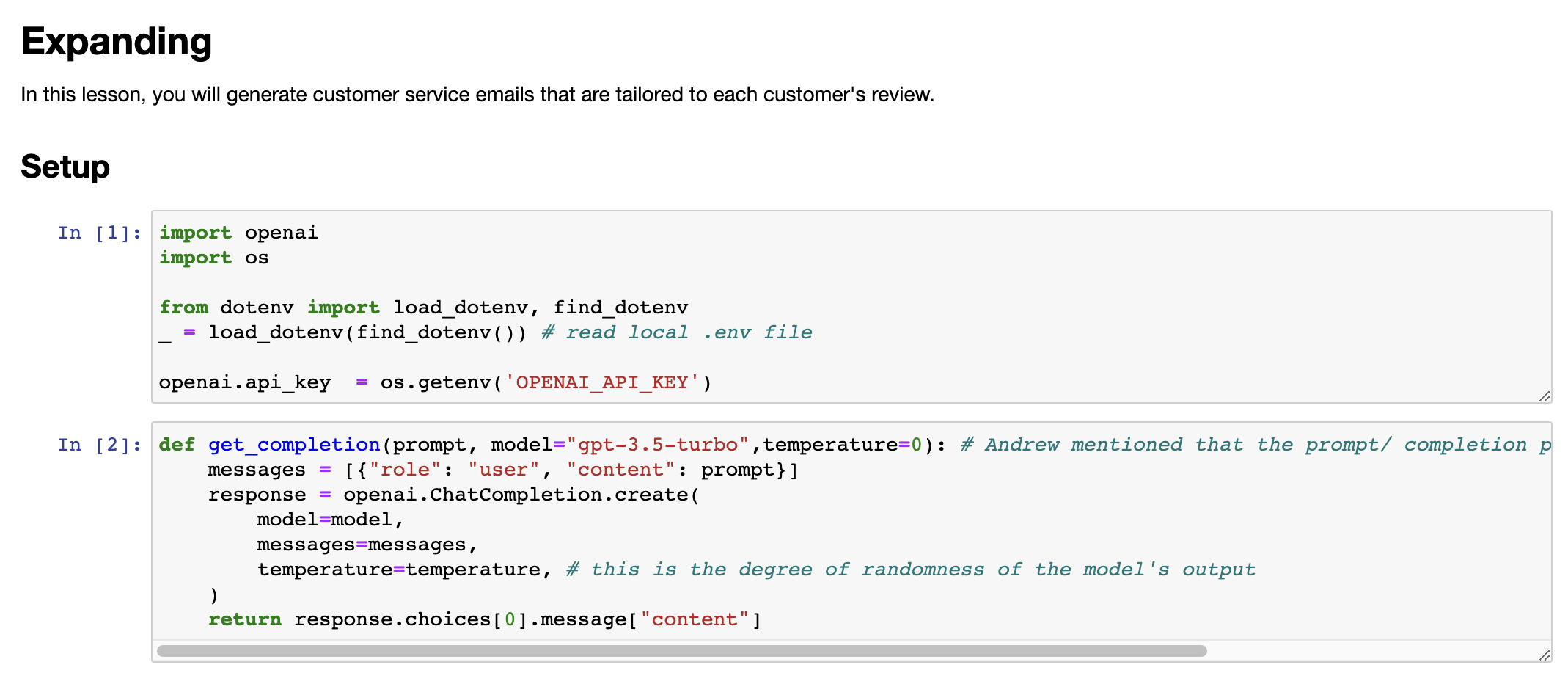

We're also going to use another of the model's input parameters, called temperature, which lets you vary the degree of exploration and variety in the model's responses. So let's get into it. Before we get started, we'll do the usual setup: set up the OpenAI Python package and define our helper function getCompletion. Now we're going to write a custom email response to a customer review: given a customer review and its sentiment, we'll generate a custom response.

7.1 Customize the automated reply to a customer email

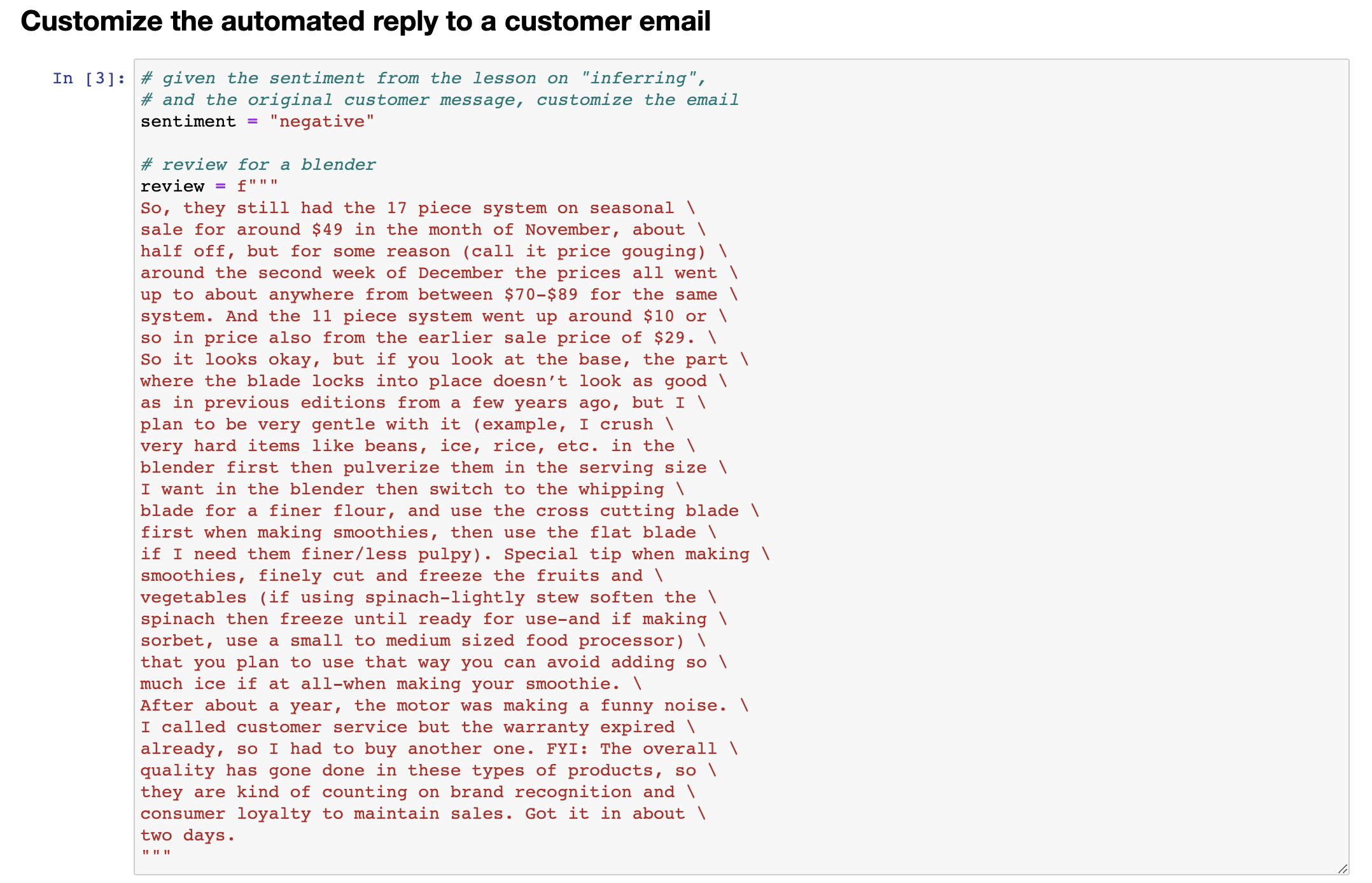

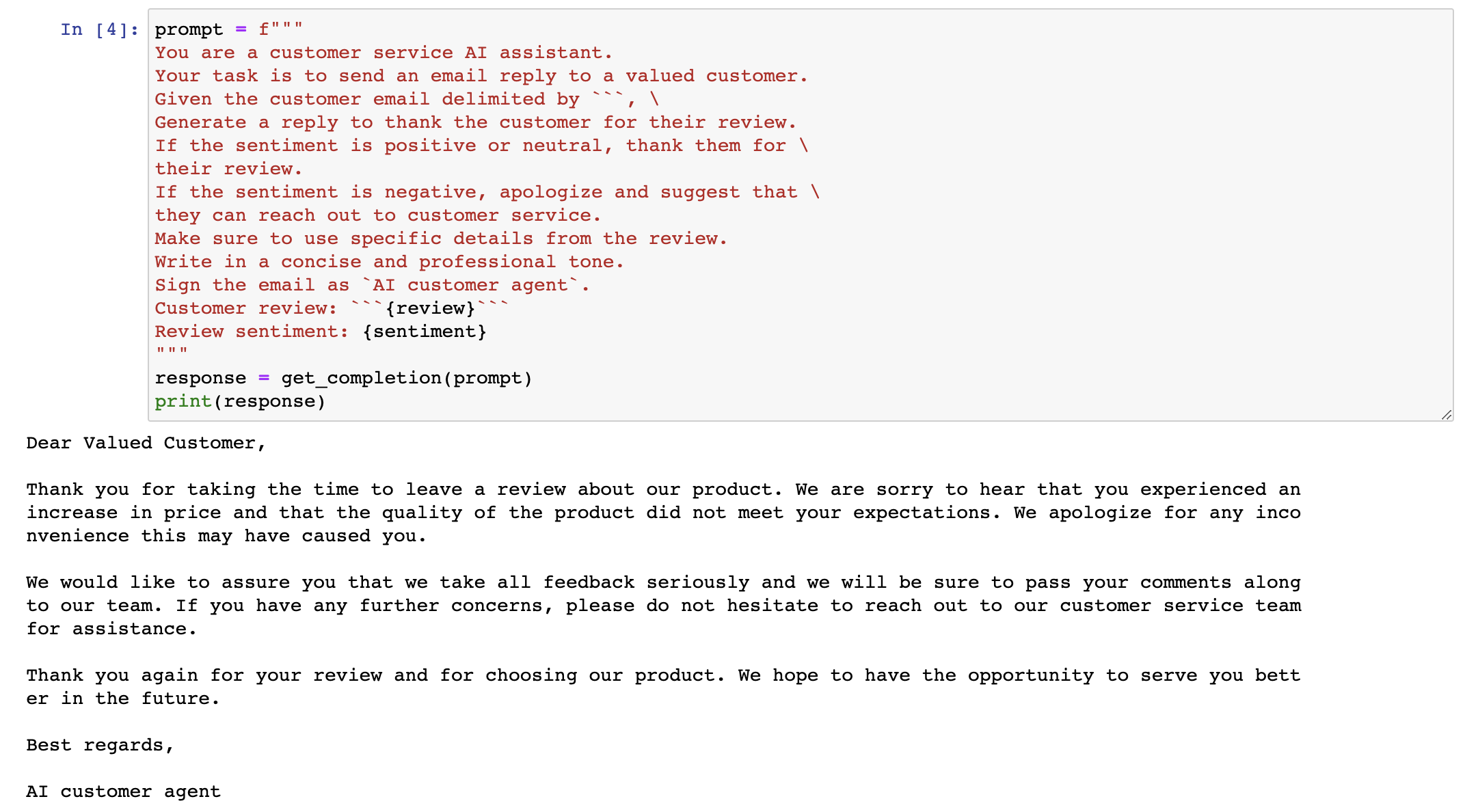

Now we're going to use the language model to generate a custom email to a customer based on a customer review and the sentiment of the review. We've already extracted the sentiment using the kind of prompts that we saw in the inferring video. This is the customer review for a blender, and now we're going to customize the reply based on the sentiment. Here the instruction is: you are a customer service AI assistant; your task is to send an email reply to a valued customer; given the customer email delimited by three backticks, generate a reply to thank the customer for their review.

If the sentiment is positive or neutral, thank them for their review. If the sentiment is negative, apologize and suggest that they can reach out to customer service. Make sure to use specific details from the review, write in a concise and professional tone, and sign the email as AI customer agent. When you're using a language model to generate text that you're going to show to a user, it's very important to have this kind of transparency and let the user know that the text they're seeing was generated by AI. Then we'll just input the customer review and the review sentiment. Also note that supplying the sentiment isn't strictly necessary, because we could use this prompt to also extract the review sentiment and then, in a follow-up step, write the email. But for the sake of the example, we've already extracted the sentiment from the review.
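The instructions just described can be assembled into a single prompt string. The following is a minimal sketch, not the notebook's actual code: the function name `build_reply_prompt`, the exact wording of each sentence, and the example review are assumptions made for illustration.

```python
FENCE = "`" * 3  # three backticks, used as the delimiter around the review


def build_reply_prompt(review: str, sentiment: str) -> str:
    """Assemble the email-reply prompt from a review and its sentiment."""
    return (
        "You are a customer service AI assistant.\n"
        "Your task is to send an email reply to a valued customer.\n"
        f"Given the customer email delimited by {FENCE}, "
        "generate a reply to thank the customer for their review.\n"
        "If the sentiment is positive or neutral, thank them for their review.\n"
        "If the sentiment is negative, apologize and suggest that they can "
        "reach out to customer service.\n"
        "Make sure to use specific details from the review.\n"
        "Write in a concise and professional tone.\n"
        "Sign the email as `AI customer agent`.\n"
        f"Customer review: {FENCE}{review}{FENCE}\n"
        f"Review sentiment: {sentiment}"
    )


# Hypothetical inputs, for illustration only.
example_review = "The blender was noisy and stopped working after a month."
example_prompt = build_reply_prompt(example_review, "negative")
print(example_prompt)
```

Keeping the review inside delimiters separates the customer's text from the instructions, and passing the sentiment as its own field mirrors the two-step flow described here (infer the sentiment first, then write the reply).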

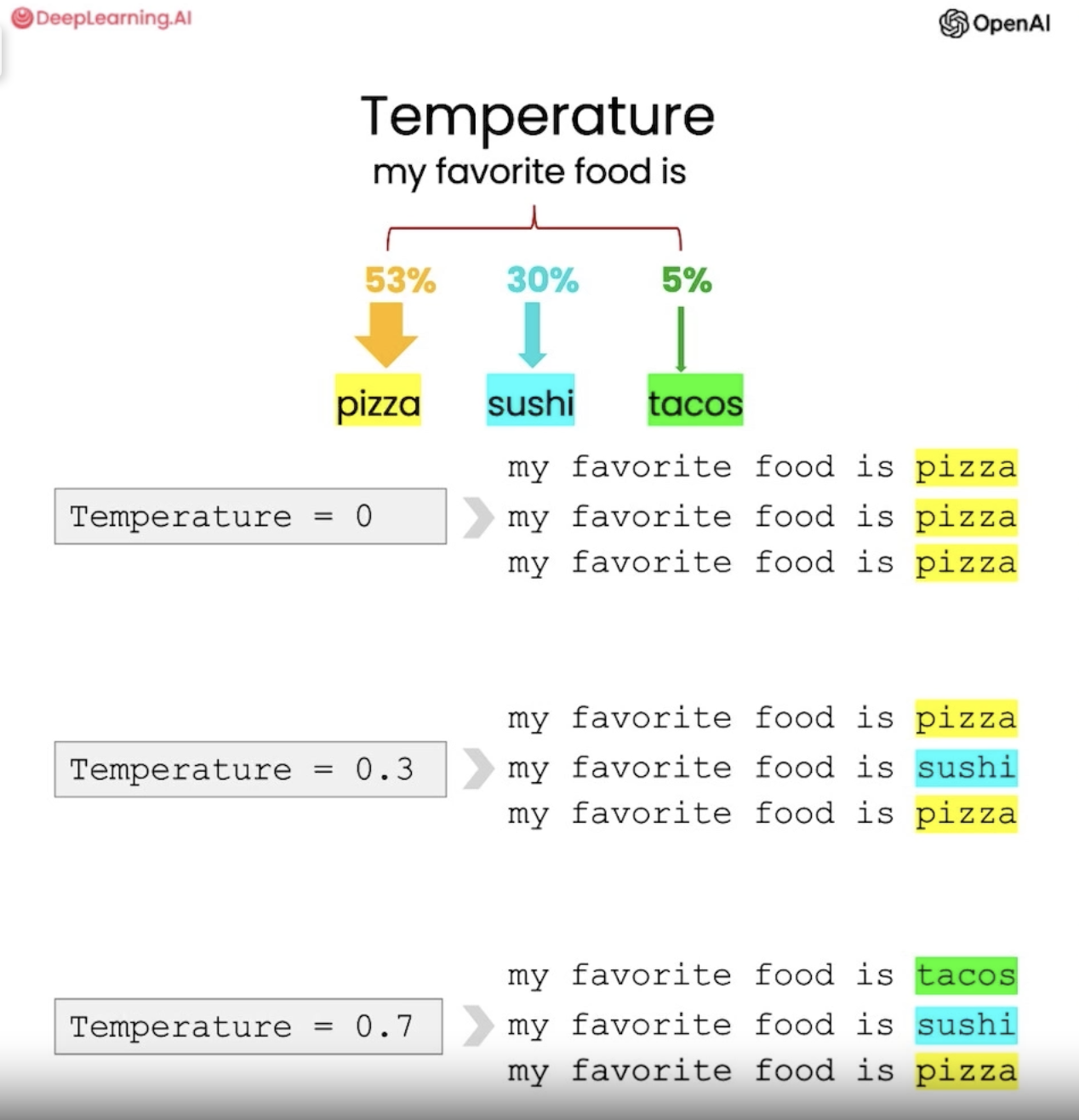

And so here we have a response to the customer. It addresses details that the customer mentioned in their review and, as we instructed, suggests that they reach out to customer service, because this is just an AI customer service agent. Next, we're going to use a parameter of the language model called temperature, which lets us change the variety of the model's responses. You can think of temperature as the degree of exploration or randomness of the model. For this particular phrase, "my favorite food is", the most likely next word that the model predicts is "pizza", and the next most likely are "sushi" and "tacos".

At a temperature of zero, the model will always choose the most likely next word, which in this case is "pizza". At a higher temperature, it will also sometimes choose one of the less likely words, and at an even higher temperature it might even choose "tacos", which has only a five percent chance of being chosen. You can imagine that as the model continues this response, "my favorite food is pizza", and generates more words, it will diverge from the response that starts "my favorite food is tacos".

As the model continues, these two responses will become more and more different. In general, when building applications where you want a predictable response, I would recommend using temperature zero. Throughout all of these videos we've been using temperature zero, and I think that if you're trying to build a system that is reliable and predictable, you should go with this. If you're trying to use the model in a more creative way, where you might want a wider variety of different outputs, you might want to use a higher temperature.
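The effect of temperature on the next-word choice can be sketched with a toy distribution. This is an illustration of the general idea, not how any particular model is implemented: it assumes temperature rescales each probability as p^(1/T) before renormalizing (equivalent to dividing logits by T), and treats temperature 0 as always picking the most likely word. Only the 5% for "tacos" comes from the explanation above; the other probabilities and the function names are made up.

```python
import random


def apply_temperature(probs: dict, temperature: float) -> dict:
    """Rescale a next-word distribution: p ** (1 / T), then renormalize."""
    if temperature <= 0:
        raise ValueError("temperature must be positive; 0 is handled as greedy choice")
    scaled = {word: p ** (1.0 / temperature) for word, p in probs.items()}
    total = sum(scaled.values())
    return {word: s / total for word, s in scaled.items()}


def sample_next_word(probs: dict, temperature: float, rng: random.Random) -> str:
    """Pick the next word; temperature 0 always returns the most likely one."""
    if temperature == 0:
        return max(probs, key=probs.get)
    rescaled = apply_temperature(probs, temperature)
    words = list(rescaled)
    weights = [rescaled[w] for w in words]
    return rng.choices(words, weights=weights, k=1)[0]


# Toy distribution for "my favorite food is ...". Only the 5% for "tacos"
# comes from the text; the other two values are invented for illustration.
next_word_probs = {"pizza": 0.65, "sushi": 0.30, "tacos": 0.05}

rng = random.Random(0)  # fixed seed so the demo is repeatable
for t in (0, 0.7, 2.0):
    picks = [sample_next_word(next_word_probs, t, rng) for _ in range(1000)]
    counts = {w: picks.count(w) for w in next_word_probs}
    print(f"temperature={t}: {counts}")
```

At temperature 0 every pick is "pizza"; as the temperature rises, the distribution flattens and the less likely words, including "tacos", are chosen more often.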

Now let's take the same prompt that we just used and try generating an email, but with a higher temperature. In the getCompletion function that we've been using throughout the videos, we've specified a model and a temperature, but left them at their defaults. So now let's try varying the temperature: we'll use the prompt and try temperature 0.7. With temperature 0, every time you execute the same prompt, you should expect the same completion, whereas with temperature 0.7 you'll get a different output every time.
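A helper with a temperature argument might look roughly like the sketch below. It is not the course's own helper: the snake_case name `get_completion`, the `client` parameter, and the default model name are assumptions, and the call shape follows the `chat.completions.create` method of the OpenAI Python SDK (version 1 and later), which may differ from the version used in the video.

```python
def get_completion(client, prompt, model="gpt-3.5-turbo", temperature=0):
    """Send one user message and return the text of the reply.

    `client` is assumed to behave like an `openai.OpenAI()` instance;
    the default model name is a placeholder.
    """
    response = client.chat.completions.create(
        model=model,
        messages=[{"role": "user", "content": prompt}],
        temperature=temperature,  # 0 = most predictable, higher = more varied
    )
    return response.choices[0].message.content


# Usage (needs the `openai` package and an API key, so it is left commented out):
# from openai import OpenAI
# client = OpenAI()
# print(get_completion(client, prompt, temperature=0.7))
```

Leaving `temperature=0` as the default keeps earlier calls predictable, while a single keyword argument is enough to try a more varied setting such as 0.7.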

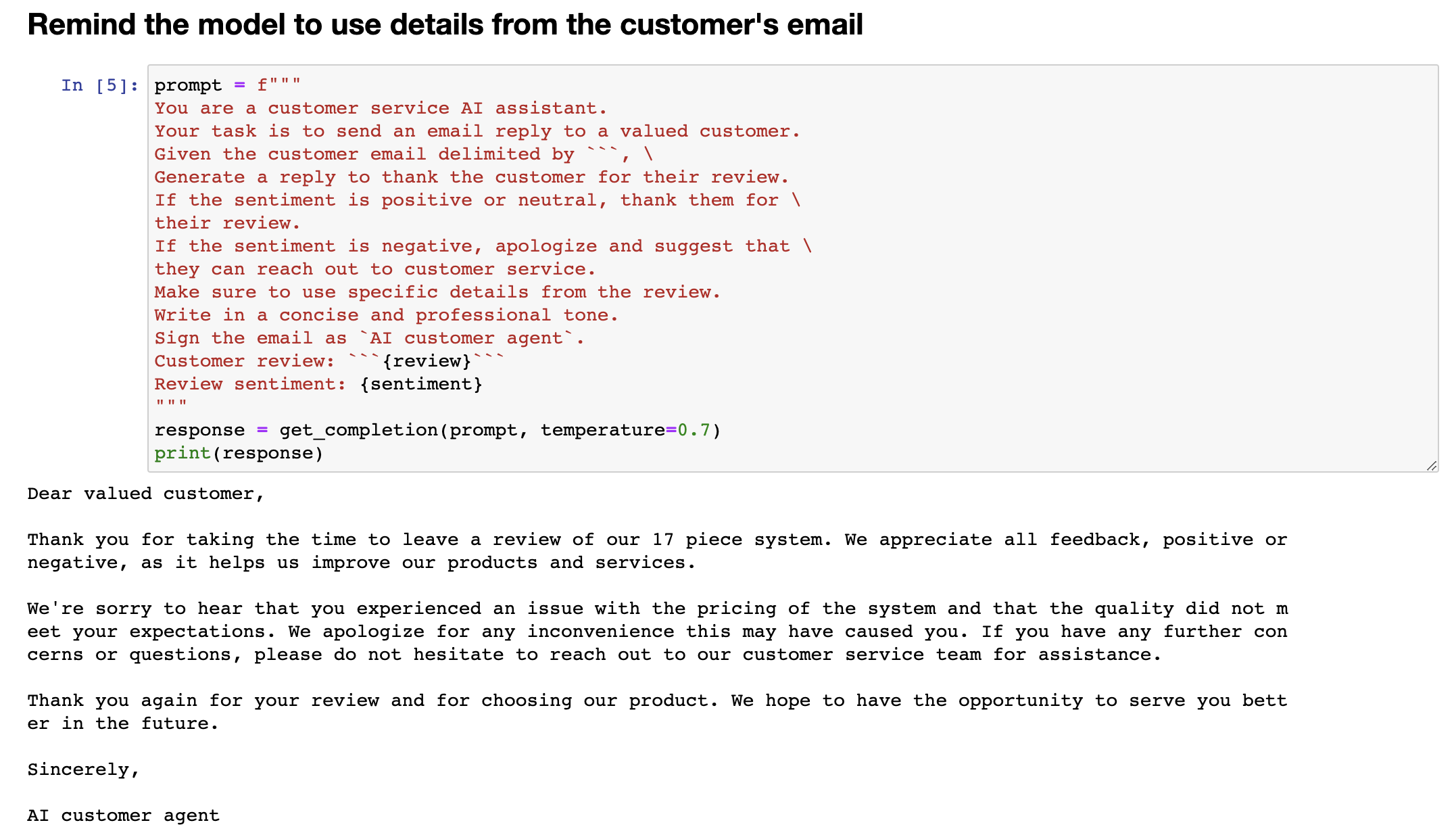

7.2 Remind the model to use details from the customer's email

So here we have our email, and as you can see, it's different from the email that we received previously. Let's execute it again to show that we'll get a different email again. And here we have another different email. I recommend that you play around with temperature yourself. Maybe pause the video now and try this prompt with a variety of different temperatures, just to see how the outputs vary.

To summarize, at higher temperatures the outputs from the model are more random. You can almost think of it this way: at higher temperatures the assistant is more distractible, but maybe more creative. In the next video, we're going to talk more about the Chat Completions Endpoint format and how you can create a custom chatbot using this format.